This is Part III of a three-part series. In Part I, I focused on how and why we should look at Government as a Platform, and why it could be beneficial to Madison to think that way. In Part II, I looked at how Madison is and is not participating in the Government as a Platform movement, and what we can do to increase our participation. In Part III, I’d like to look at some ideas on bringing modern technology to civic applications. Some of them will be ways to directly improve people’s lives; others are more internal process improvements, which would make for a more efficient and transparent government. Some of the projects I’ve suggested in Parts I and II, and others are new. None of these are fully detailed proposals – they’re just broad strokes of what I’d like to see.

An Improved Legistar

I discussed this in Part II, but our legislation tracking system is not good. Besides being clunky, ugly, and slow, it’s just stuck in 1999-thinking. The Internet is all about connections, and Legistar doesn’t have any. It’s not enough to just remember what City Staff sticks on the page: if I’m a citizen and I want to know what’s going on with an issue, I don’t want to search the entire Internet, I want to find it all in one place. What newspaper stories have been written about this issue, or blog posts? What is or was Twitter saying about it? Where are budget numbers, and what happens to those budgets if the assumptions change – let me see that in real time: give me an embedded spreadsheet, and let me see what happens if numbers change. Has this been discussed at meetings, and if they are recorded, can I jump right to the time offset in the video stream? Let me give feedback directly, and let me give feedback on feedback.

It’s important, of course, to maintain some control over the page, so while I might push Legistar more towards Wikipedia, we wouldn’t throw open everything for anyone to change, but a static page that no one can add anything to is not taking advantage of any sort of community.

There are systems out there that already do part of this – can we switch to them? If not now, then at some point in Madison’s future – 5 years, 10 years, 50 years – I promise we won’t be using Legistar anymore. How will we get our data out of Legistar and on to our next system?

Development Proposal Review System

The Economic Development Committee is preparing a set of recommendations to the City on how it can improve the Development Review Process. That is, when someone wants to build a new building in Madison, how does that process work? It runs from when someone wakes up and says “You know what this corner needs?”, to when the developer floats the idea by the neighborhood and city staff, to when the final designs are brought before the Plan Commission, and even to 6 months after the building opens, when we visit the building and see how close the designs, the traffic patterns, the noise, and so forth are to what was actually proposed and planned.

The City has very good data on what happens to projects that actually file building plans with the city and ask for approval – the so-called “Application” phase. What the city doesn’t know so much about is what happens to projects that don’t get to the formal application process, but are in the “Pre-Application” phase. This can range from something as simple as a property owner going down to city hall and asking “I own a vacant lot, can I build a small building on it?” to Curt Brink’s 27-story, $250 million Archipelago Village proposal.

Some of them never move forward, but we’d like to have a better handle on what people even suggest, so we can track it, and help it along. Currently, all of this is ad-hoc: it might be a suggestion that goes to an Alder, or city staff, or a trade group, but there is no one place where this information is recorded.

The EDC is likely to include in its recommendations that the city adopt a web-based registration system to track these proposals. This is useful at all stages of the project lifecycle: when the proposal is just a twinkle in the eye of a developer, or some concepts that can start being shared with the community, to the formal application process with the city. By registering early, we can track the project as it evolves, share information, and be sure that everyone who needs to be notified is notified.

This is the sort of thing that we might look to Civic Commons to find a solution to, but if not, it might be a perfect application to suggest to Code For America – it’s already in line with one of their “core problem projects” they’d like to address in 2012. It’s unlikely that we could count on the hacker community to step up and build this system for us out of their own generosity – it’s too complex, and as most software projects that the community develops as open-source stem from some sort of itch they want to scratch, I don’t hold out much hope of something emerging.

If we’re going to spend money on building a development proposal tracking system, let’s get some of the best people in the world to build it for us, and let’s make it the most innovative, user-friendly, and powerful system in the country. Few things would do more to dispel the myth that Madison is not friendly for business than making the system for business interaction a flagship development.

ReportAProblem, 2.0

I also wrote about this in Part II, but we can do better with ReportAProblem. This would be two-fold: a better front-end and a more advanced middleware/backend.

On the front-end, we should have more than a web front-end. Let me upload pictures, or add a voice description of what the problem is. Put this all in an iPhone app, something that knows who I am and where I am, so it can automatically populate the geographic fields.

The back-end is where it gets cool. First, even if the city never updates ReportAProblem, we can build a new ReportAProblem that simply proxies reports into ReportAProblem: Users fill out report on BetterReportAProblem.com, which turns around and submits a report to cityofmadison.com – but now, we have an API we can build on top of.

With a middleware, we can do automated follow-ups – has this issue been resolved? Do you say it’s resolved, or only the city? For the city, the new backend can identify duplicate reports, or at least suggest the probability that it’s a duplicate report, and perhaps tell the user as soon as the problem is reported that the city is already aware of it and expects to have it resolved within X hours.
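As a rough sketch of what “suggest the probability that it’s a duplicate” could mean in the middleware, here is one crude way to score a new report against an existing one, combining how close together they are with how similar the descriptions read. The report fields (`lat`, `lon`, `text`), the 150-meter cutoff, and the 50/50 weighting are all assumptions of mine for illustration, not anything the city’s system exposes, and the result is a heuristic score rather than a calibrated probability.

```python
import math
from difflib import SequenceMatcher


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/long points."""
    r = 6371000.0  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def duplicate_score(new, old, max_distance_m=150.0):
    """Return a score from 0 to 1; higher means more likely the same problem.

    `new` and `old` are dicts with hypothetical keys 'lat', 'lon', 'text'.
    """
    d = haversine_m(new["lat"], new["lon"], old["lat"], old["lon"])
    if d > max_distance_m:
        return 0.0  # too far apart to plausibly be the same issue
    closeness = 1.0 - d / max_distance_m
    text = SequenceMatcher(None, new["text"].lower(), old["text"].lower()).ratio()
    # Equal weighting of location and wording is an arbitrary starting point.
    return 0.5 * closeness + 0.5 * text
```

A real system would want to tune the cutoff and weights against reports that staff have already marked as duplicates, and would probably compare report categories as well.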

With a set of semi-trusted people, like the local alders or neighborhood association people, if the report is incomplete or ambiguous, can it be cleaned up? (I saw the spreadsheet of snow issues reports a few years back: Many people have problems with putting the right information into the right place in a web form.) With some crowdsourcing, we can clean it up and apply local knowledge to make the report more clear. With a smart phone app, we can let people report the problem over the phone, and use, say, Twilio’s automated transcription feature to make reporting simple, but still try to automatically route the report. This means literally just dialing a number, and saying “I see X, Y, and Z as a problem”, and being done, but without requiring a poor human to listen to and type everything in. (Even if voice transcription is a bit flaky now, it’s only getting better, and a human can always listen if the transcription comes back nonsense.)

SeeClickFix or other projects might do much of this, or we can build our own. At any rate, a single web form with an HTTP post is pretty cool for 1999, but we can do better today.

Some sort of app with the lakes.

I don’t have any particularly great ideas here, but it seems that we can and should be doing something to help our lakes, one of the greatest assets to the high quality of life in Madison. IBM’s Creek Watch could be a model project.

This is an example of what’s called “Citizens as Sensors” and drawing insights from data collectively gathered.

A computable version of Madison Measures

Madison Measures is a giant compendium of statistics about Madison, full of spreadsheets, graphs, tables, and footnoted references. Unfortunately, it’s published as a giant PDF and is really meant to be printed, not computed.

Publishing online does not mean take the paper version and put it online. Let’s publish this on the web the right way – as a document or web site with structured, linkable, and computable data. Include the spreadsheets and raw data behind the graphs. Let me drill down into the data, and split it up over census tracts to see how all parts of our community are really doing. Build Madison Measures so that it is updated in realtime, not once a year. I’m not interested in how we did last year, I want to know how we’re going to do this year.

Our own version of Google Fiber.

I’ve written extensively about Google Fiber in the past, but there’s something I want to repeat here. I think it’s unlikely that Madison will get Gigabit fiber from Google. I’m not trying to be down on Madison, and if I was Google, I’d say “of course Madison is the perfect city for our experiment”. However, since I don’t know what Google’s criteria for evaluating cities might be, I’m just going to assume that we’re no better than anywhere else and we’re all equally likely – which means we have a 1 out of 1,100 chance, or a 0.09% chance of winning. We may see an announcement any day now, and while I’m hopeful, we should be thinking about what happens in the likely event Google goes somewhere else.

As part of the application, we put together a long list of endorsements and reasons why gigabit fiber would be a game changer in Madison. Do we really believe that? And if we really do believe it, then let’s make it happen, with or without Google.

This is obviously a far longer subject than can be addressed here, but let’s put together a MadFiber 2.0 group, and start thinking about how we can get gigabit fiber to Madison residents. I don’t know what the answer is – maybe it’s do nothing, because the Google Fiber project will spur industry competition and be a watershed moment, much like Gmail’s gigabyte of storage forced everyone to compete, or Google Maps forced every other map site to step up its game, and soon every home will be connected to gigabit fiber, no matter who your provider is. Or, maybe we look at other technology – a wireless mesh network, or partner with MG&E to deploy smart meters and piggyback high-speed networking on top of their network. Or, maybe we start small: build an experimental network along the East Washington corridor, using the existing conduit and as an incentive to begin its redevelopment.

I know this would be hard, but I’d hate to see the energy around bringing gigabit to Madison be dashed just because Google decided to help someone else. Google even encourages cities to continue the push, and we should.

Implement the NYTimes Districts API with enhancements.

This is a little geeky, but the New York Times has an API that tells you the political subdivisions (voting wards, city council districts, state assembly districts, congressional districts, etc.) that a given lat/long coordinate falls into.

This is useful for people who want to build government engagement sites and services, or hyperlocal news sites. SeeClickFix could use it to discover who the Alder is for the location where you’re about to submit a problem report, or Madison.com could use it to identify stories that involve your county supervisor. The API is actually fairly simple, and the challenging part is to track down all of the data and load it into the system.
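To show why I call the API itself fairly simple, here is a minimal sketch of the lookup at its core: given a coordinate and a set of district boundaries, test which polygon in each layer contains the point. This is not the New York Times API or its response format. The function names and the layout of `layers` are my own assumptions, and the standard ray-casting test below treats boundaries as flat polygons, which is fine at city scale.

```python
def point_in_polygon(lat, lon, polygon):
    """Ray-casting test; polygon is a list of (lat, lon) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude at which this edge crosses the point's latitude.
            cross = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross:
                inside = not inside
    return inside


def districts_for(lat, lon, layers):
    """Return {layer_name: district_name} for every layer containing the point.

    `layers` maps a layer name (e.g. 'aldermanic') to a dict of
    {district_name: polygon}. This structure is hypothetical.
    """
    result = {}
    for layer, districts in layers.items():
        for name, polygon in districts.items():
            if point_in_polygon(lat, lon, polygon):
                result[layer] = name
                break
    return result
```

With two made-up square districts side by side, `districts_for(0.5, 0.5, layers)` would pick out whichever square contains the point. The hard part remains what the paragraph above says it is: collecting real boundary data for every layer and keeping it current.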

All of these applications are linked together: Remember, Government as a Platform. The District API is a building block for other applications.

A Madison Neighborhoods API.

The City of Madison recognizes about 120 neighborhoods or neighborhood associations. Keeping track of them all is hard. Imagine a hybrid Wiki with API to help. With a smart phone app, we could make it easier for people in the neighborhood to add data about their neighborhood – instead of SeeClickFix to report a problem, SeeClickCelebrate to mark neighborhood businesses, landmarks, schools, parks, trails, and anything else of interest – just pull out the iPhone, take a picture, and then a one-button click to say what it is. GPS remembers where it is, and later people can edit it online to fill in more details, or a web service searches a database to find the details. Using data from the Assessor’s office and Dane County Land Information Office, many of the details are already known. If we can, integrate into ELAM and traffic engineering, so it is easy to find out what’s changing in the neighborhood.

The neighborhood API could help people get in touch with what’s going on in their neighborhoods – there is no one single list of every neighborhood email list, though most have them as a Yahoo group or something similar. It was years before I learned how to get on my neighborhood mailing list, and it wasn’t because of the city that I figured it out. An API, and collecting the necessary data to make the API useful, would be beneficial to all Madison residents.

As an interesting social experiment, subscribe a bot to every single neighborhood list, and track the flow of content between neighborhoods. As someone who’s on multiple lists, I know that sometimes things from one list get forwarded to another list. I’m interested to know, just what does that social graph look like? What neighborhoods stay in good contact? What neighborhoods don’t find out about civic information? What gets forwarded between neighborhoods, and what seems to be of interest only to one group? From what’s been passed between groups, can we use Machine Learning to predict if a new message should be forwarded between groups?
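A first pass at that social graph doesn’t need machine learning at all. The sketch below assumes the bot has already collected messages as `(timestamp, list_name, subject)` tuples, treats the list where a subject first appears as its origin, and counts one edge per other list the subject later shows up on. Matching on a normalized subject line is a deliberately naive stand-in for real forward detection, and the tuple layout and function names are my own assumptions.

```python
from collections import Counter


def normalize_subject(subject):
    """Lowercase a subject and strip any leading Re:/Fw:/Fwd: prefixes."""
    s = subject.strip().lower()
    changed = True
    while changed:
        changed = False
        for prefix in ("fwd:", "fw:", "re:"):
            if s.startswith(prefix):
                s = s[len(prefix):].strip()
                changed = True
    return s


def forwarding_edges(messages):
    """Count (origin_list, destination_list) pairs.

    `messages` is an iterable of (timestamp, list_name, subject) tuples.
    Each subject contributes at most one edge per destination list.
    """
    first_seen = {}   # normalized subject -> list where it first appeared
    seen = set()      # (normalized subject, list) pairs already counted
    edges = Counter()
    for _ts, list_name, subject in sorted(messages):
        key = normalize_subject(subject)
        origin = first_seen.setdefault(key, list_name)
        if list_name != origin and (key, list_name) not in seen:
            edges[(origin, list_name)] += 1
        seen.add((key, list_name))
    return edges
```

The resulting counts are the weighted edges of the graph: pairs with high counts are neighborhoods that stay in good contact, and lists that never appear as a destination are the ones not hearing about things.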

Right now, the Madison neighborhood page is not very dynamic. With more data, and a plan for how it can easily be kept up to date, we can engage more of the city.

Hacking the Public Library

This would be such a huge post that I’m going to come back to it later, but I think there’s no more exciting target for civic hacking than the public library. It holds a ton of interesting data, and as we migrate away from the library as a temple to books and towards a community information agency, both for the dissemination and production and curation of information, there will be countless opportunities to improve the system.

I am happy to say that when I started doing research for this post, I was pleasantly surprised at how much better the library’s online presence was than I expected.

For now though, just two interesting links – Jon Udell on “Remixing the Library” – his abstract:

In an online world of small pieces loosely joined, librarians are among the most well qualified and highly motivated joiners of those pieces. Library patrons, meanwhile, are in transition. Once mainly consumers of information, they are now, on the two-way web, becoming producers too. Can libraries function not only as centers of consumption, but also as centers of production?

Also, a podcast of Udell and Beth Jefferson on software to “transform public libraries’ online catalogs into environments for social discovery of resources that are cataloged not only by librarians, but also by patrons.”

Data Mashup for an Energy Efficiency study.

Take two cool websites – first, the City Assessor’s property information lookup. It will tell you the square footage of nearly any property in Madison.

As a second website, take MG&E’s Average Energy Use and Cost for Residential Addresses – here you enter an address, and MG&E tells you the maximum and average power bill. It’s very handy before you rent a new apartment – is this place going to cost a fortune to heat in the winter?

Now, imagine joining them together, and taking average energy bills divided by square footage as a rough estimate of energy efficiency. Plot that as a heatmap for the city. Any predictions for parts of the city that are more energy efficient? Less energy efficient? If we overlaid that with census tracts to look at socioeconomic figures, would we be surprised?

The pain is that the data is “open”, but not easy to use. You’d have to feed in addresses and screenscrape it all out. Again, an API would be great, or bulk data, especially from the assessor’s data.
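Once the two datasets are in hand, the join itself is small. This sketch assumes each source has already been scraped into a dictionary keyed by address: average monthly bill in dollars from the MG&E lookup, and square footage from the Assessor’s lookup. Those dictionary shapes and the function name are my assumptions, and matching addresses exactly is optimistic, since real address strings would need normalizing first.

```python
def cost_per_sqft(avg_bills, square_feet):
    """Join two address-keyed dicts into average bill per square foot.

    avg_bills:   {address: average monthly bill in dollars}
    square_feet: {address: square footage}
    Addresses missing from either source, or with zero area, are skipped.
    """
    result = {}
    for address, bill in avg_bills.items():
        sqft = square_feet.get(address)
        if sqft:  # skips both None (missing) and 0 (bad data)
            result[address] = bill / sqft
    return result
```

The output is the number you would geocode and plot as the heatmap. It is only a rough proxy, as the post says: it ignores heating fuel type, household size, and whether the bill covers the whole building.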

Google Streetview Data with Open Access

I’m a big fan of Google Street View, but I wish that the data behind it was more accessible and updatable. We should also be using it more in civic processes – when the Plan Commission discusses a site, a Street View or Google Earth view of the area should be on the wall while they’re discussing it, and they should be able to move between it.

From an open data standpoint, more and more geolocation data is coming from public sources. OpenStreetMap is like a Wikipedia for map data, and has a better license for using the data – if you build an application on top of Google Maps, Google reserves the right to put advertisements into the maps. The plus of OpenStreetMap is that we can update data as fast as we can contribute new data, and not be limited by the whims of Google.

In time, OpenStreetMap will incorporate crowd-sourced StreetView data, and provide API and website access to that data. Many city vehicles already drive Madison streets every day – we probably cover 80% of the city every day, and 100% of the city every month. Cameras are continually dropping in price, and hackers are already putting together do-it-yourself streetview cameras. Can we outfit a few city vehicles with these rigs and collect data ourselves?

This would be useful for planning purposes – as soon as the built environment changes, we’d have the latest data. We can see how things change over time, and look back at “historic” views. As the seasons change, we can switch out imagery – if Street View is used by GPS apps to help you locate businesses, how the storefront looks in winter when the leaves are gone from trees matters.

Citizens as Sensors for Madison Metro Buses.

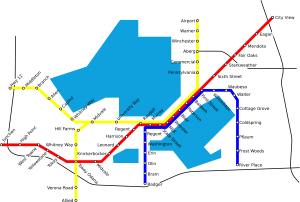

One of the coolest “big ideas” for Madison is the “M” – The Metro Mover Bus Rapid Transit system. The brainchild of former Madison transit planner Michael Cechvala and the rest of the Madison Area Bus Advocates, it’s a system of bus routes that are designed to move through the city quickly. It achieves this by straightening out bus routes, limiting the number of stops, and adding traffic signal bypasses so time spent at red lights is minimized.

In talking with Michael, he estimates that delay at bus stops and delays at traffic signals are about equal and combined consume about 40% of the travel time. He doesn’t know if Madison Metro has this data exactly, but he’s been putting it together for Seattle, and he does it the old-fashioned way: riding the bus and writing down in a notebook when the bus stops, why it stopped, and when it starts again. We can do better.

Smart Phones have GPS and accelerometers, so they can tell where they are and how fast they’re moving. (It’s helpful to have both, but technically you can get by with just one or the other.) You don’t even need a smart phone – most GPS trackers could measure the time it takes to get from Point A to Point B, and tell you exactly how many times you were stopped, where those stops were, and for how long you were stopped. What they can’t tell you is why you were stopped. That’s where the smart phone comes in handy: imagine an app that, when you’re stopped, asks you to press one button if you’re stopped for a light, and another button if you’re stopped for people getting on or off the bus.
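Pulling the stops out of a recorded track is the easy part. As a minimal sketch, assume the track is a time-ordered list of `(t_seconds, lat, lon, speed_mps)` samples, which is roughly what a GPS logger gives you. The thresholds for “stopped” and for the shortest stop worth recording are guesses of mine, not measured values.

```python
def find_stops(track, speed_threshold=0.5, min_duration=5):
    """Find stops in a GPS track.

    track: list of (t_seconds, lat, lon, speed_mps), sorted by time.
    Returns a list of dicts with 'start', 'duration', 'lat', 'lon'.
    A stop runs from the first slow sample to the next moving sample.
    """
    stops = []
    start = None  # (t, lat, lon) of the first sample in the current stop

    def close_stop(end_t):
        duration = end_t - start[0]
        if duration >= min_duration:
            stops.append({"start": start[0], "duration": duration,
                          "lat": start[1], "lon": start[2]})

    for t, lat, lon, speed in track:
        if speed < speed_threshold:
            if start is None:
                start = (t, lat, lon)
        elif start is not None:
            close_stop(t)
            start = None
    if start is not None:  # the track ended while still stopped
        close_stop(track[-1][0])
    return stops
```

Each stop the function returns is exactly the moment where the app would pop up its two buttons and ask why.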

Even better, we don’t need everyone to do all of this. Because the phone or dedicated GPS device can record a GPS track along with the length of stops, if we’re stopped at or close to a point that has been labeled as a stoplight in the past, we can, with fairly high probability, predict that the reason we’re stopped there this time is again a stoplight. Same thing with bus stops.
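That prediction can start out as a simple majority vote among nearby labeled stops. The sketch below assumes labeled stops are stored as `(lat, lon, label)` tuples with labels like `"light"` and `"bus_stop"`; the 30-meter radius and the names are my own choices for illustration. The share of votes for the winning label serves as a rough confidence.

```python
import math
from collections import Counter


def distance_m(lat1, lon1, lat2, lon2):
    """Approximate distance in meters; adequate over a few hundred meters."""
    mean_lat = math.radians((lat1 + lat2) / 2)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat) * 6371000.0
    dy = math.radians(lat2 - lat1) * 6371000.0
    return math.hypot(dx, dy)


def predict_reason(stop, labeled_stops, radius_m=30.0):
    """Guess why an unlabeled stop happened.

    stop: (lat, lon) of the unlabeled stop.
    labeled_stops: list of (lat, lon, label) from earlier labeled trips.
    Returns (label, confidence), or (None, 0.0) if nothing is nearby.
    """
    votes = Counter(
        label
        for lat, lon, label in labeled_stops
        if distance_m(stop[0], stop[1], lat, lon) <= radius_m
    )
    if not votes:
        return None, 0.0
    label, count = votes.most_common(1)[0]
    return label, count / sum(votes.values())
```

Where a bus stop sits right at a signalized intersection the vote will be split, and the low confidence is itself useful: those are the stops where the app should still ask a human.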

With better data, we can make more accurate predictions as to how long Metro routes would take, and how cost effective traffic signal bypass systems could be. We can take tracks of real, actual bus routes, and use them as the basis for simulations where we remove different stops or bypass certain traffic lights. With a little effort, we could build an app that records and optionally labels this data. Some people would collect this data altruistically, and if need be, Metro could offer some incentives – say a $10 coupon to an area business if 20 labeled trips were “reported” by the app or if 50 unlabeled trips were reported by the app. (The labeled trips are the more valuable, because we can use them to predict what the unlabeled data should be.) This is another example of citizens as sensors, and is a low-barrier way for people to positively contribute to Madison. If the data would be useful to Madison Metro, we should create this app.
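The simplest version of such a simulation just subtracts the recorded delay for each stop we imagine removing. This sketch assumes each recorded stop is a dict with a hypothetical `id`, a `reason` of `"light"` or `"bus_stop"`, and a `duration` in seconds. It is a lower bound on realism: it ignores time lost slowing down and speeding up, and it ignores riders shifting to the remaining stops and lengthening the dwell time there.

```python
def simulated_trip_time(total_seconds, stops, removed_bus_stops=(), bypassed_lights=()):
    """Estimate trip time if some bus stops were removed or lights bypassed.

    total_seconds: recorded end-to-end time of a real trip.
    stops: list of dicts with 'id', 'reason' ('light' or 'bus_stop'), 'duration'.
    removed_bus_stops / bypassed_lights: collections of stop ids to drop.
    """
    saved = 0
    for s in stops:
        if s["reason"] == "bus_stop" and s["id"] in removed_bus_stops:
            saved += s["duration"]
        elif s["reason"] == "light" and s["id"] in bypassed_lights:
            saved += s["duration"]
    return total_seconds - saved
```

Run over many recorded trips, the difference between the real and simulated times gives a first estimate of what a given signal bypass or stop consolidation would buy.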

For some other ideas of possible apps, see the Apps for Democracy contest from Washington, DC.

This set of posts doesn’t really have a conclusion, due in no small part to my being sick of writing, but also because I don’t think this is the end of the process. The main goal was to just plant some ideas about what we could do together in this space, and to provide some background. If we decide to pursue a Code for America application, it might be helpful as a starting point for a discussion.

Look for more in the future, hopefully in shorter-to-read packages!